Kubernetes (K8s) is a powerful tool for managing containerized applications, but it can also lead to a lot of cloud waste if not used properly.

One of the leading causes of cloud waste in K8s is over-provisioning: allocating more resources, such as CPU and memory, to a pod or deployment than the application actually needs. The surplus sits idle, but you still pay for it.
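To make over-provisioning concrete, the sketch below compares what a container requests with what it actually uses. It is a minimal illustration, not Finout's method. It assumes you already have the request and observed-usage values as Kubernetes quantity strings (for example, from a pod spec and from `kubectl top`), and it handles only the CPU suffix `m` and the common memory suffixes `Ki`/`Mi`/`Gi`/`Ti` and `k`/`M`/`G`/`T`.

```python
"""Minimal sketch: how much of a container's request sits unused?

Assumption: requests and observed usage are Kubernetes quantity strings.
Only common suffixes are handled; this is illustrative, not a full parser.
"""

# Binary and decimal memory suffixes used in Kubernetes quantities.
MEMORY_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as "250m" or "2" to cores."""
    if quantity.endswith("m"):
        return float(quantity[:-1]) / 1000  # millicores -> cores
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as "512Mi" or "1G" to bytes."""
    for suffix, multiplier in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * multiplier)
    return int(quantity)  # plain number of bytes


def idle_fraction(requested: float, used: float) -> float:
    """Share of the request left unused: 0.0 means fully used."""
    if requested <= 0:
        return 0.0
    return max(0.0, 1 - used / requested)


if __name__ == "__main__":
    cpu_idle = idle_fraction(parse_cpu("1"), parse_cpu("250m"))
    mem_idle = idle_fraction(parse_memory("1Gi"), parse_memory("768Mi"))
    print(f"CPU idle: {cpu_idle:.0%}, memory idle: {mem_idle:.0%}")
    # prints: CPU idle: 75%, memory idle: 25%
```

A container with high idle fractions on both dimensions is a candidate for lower requests.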

Another cause of cloud waste is inefficient scaling: poorly tuned scaling rules can create more pods than demand requires, or churn pods up and down unnecessarily, wasting resources.

A lack of monitoring also makes wasted resources hard to identify and address. Unused resources add to the problem: if the resources allocated to pods are not released automatically when those pods are deleted or terminated, they sit idle.
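Storage is a common example of resources that outlive the workloads that used them. When a PersistentVolumeClaim is deleted and its PersistentVolume has the `Retain` reclaim policy, the volume moves to the `Released` phase and the underlying disk keeps incurring charges. The minimal sketch below assumes you have saved the output of `kubectl get pv -o json` and lists the volumes in that phase; the sample volume names are hypothetical.

```python
import json


def find_released_volumes(pv_list_json: str) -> list[tuple[str, str]]:
    """Return (name, capacity) for each PersistentVolume in the "Released" phase.

    Expects JSON shaped like `kubectl get pv -o json` output, with an "items" list.
    """
    pv_list = json.loads(pv_list_json)
    released = []
    for pv in pv_list.get("items", []):
        if pv.get("status", {}).get("phase") == "Released":
            name = pv["metadata"]["name"]
            capacity = pv.get("spec", {}).get("capacity", {}).get("storage", "unknown")
            released.append((name, capacity))
    return released


if __name__ == "__main__":
    # Hypothetical sample shaped like `kubectl get pv -o json` output.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "pv-orders-db"},
             "spec": {"capacity": {"storage": "100Gi"}},
             "status": {"phase": "Released"}},
            {"metadata": {"name": "pv-web-cache"},
             "spec": {"capacity": {"storage": "20Gi"}},
             "status": {"phase": "Bound"}},
        ]
    })
    print(find_released_volumes(sample))  # [('pv-orders-db', '100Gi')]
```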

Preventing these issues requires following best practices: proper resource management policies, monitoring, scaling rules, and good governance. With these in place, you can reduce cloud waste and lower your K8s costs.


Why is waste detection in Kubernetes so challenging?

Waste is hard to detect because containerized workloads are dynamic and transient. Containers are frequently created, scaled, and deleted, which makes it hard to track resource usage and accurately identify underutilized resources.

In addition, many Kubernetes environments span multiple clusters, namespaces, and services, which makes it difficult to get a holistic view of resource usage across the whole environment. Finally, many different types of resources can be wasted, such as CPU, memory, and storage, and each needs to be monitored and analyzed separately.

How can cost management solutions help?

Several solutions on the market can help mitigate the problem, mainly through resource optimization and autoscaling.

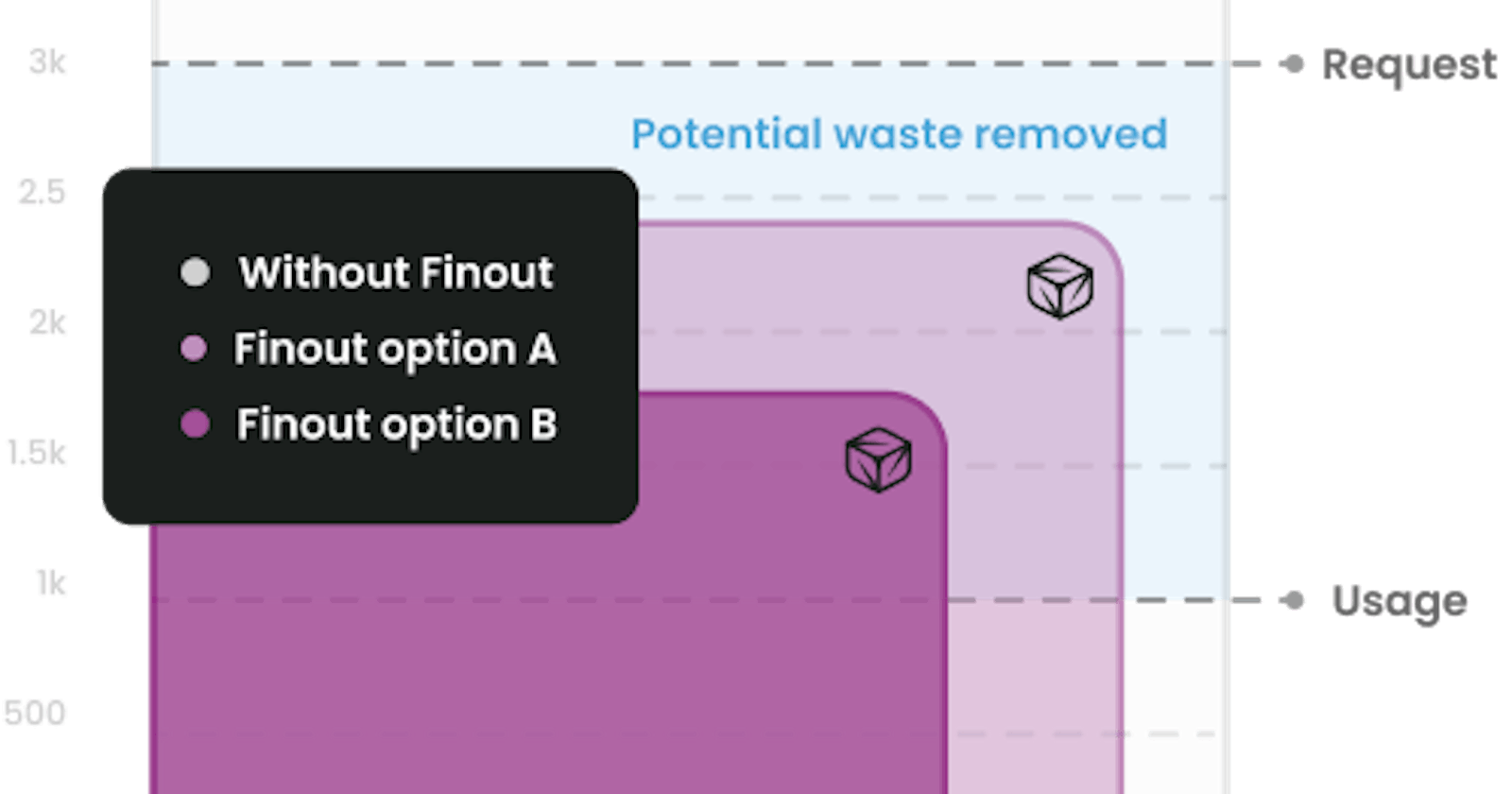

These tools provide insight into the resources that pods and nodes use and make recommendations for optimizing that usage. For example, they can identify over-provisioned resources and suggest how to reduce them, or automatically scale the number of pods based on resource usage, which reduces the risk of waste from inefficient scaling.
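Kubernetes itself offers this kind of usage-based scaling through the Horizontal Pod Autoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that rule under simplifying assumptions; the real controller also accounts for pod readiness, missing metrics, and scale-down stabilization, and the example deployment is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical deployment: 4 replicas, target of 60% average CPU utilization.
    print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
    print(desired_replicas(4, 20, 60, min_replicas=2, max_replicas=10))  # 2
    print(desired_replicas(4, 63, 60, min_replicas=2, max_replicas=10))  # 4
```

The tolerance band is what keeps small metric fluctuations from churning pods up and down.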

Where does Finout fit in the picture?

With Finout, you can get complete visibility into your K8s spend (down to the pod level), without installing an agent into your cluster.

The tool breaks down your costs by service, including Kubernetes namespaces, external databases, storage volumes, and other resources. It also makes it easy to track usage metrics, so you can spot underutilized services and configure autoscaling where it's most needed.
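A common way to attribute shared node cost to namespaces is to split it in proportion to what each pod requests. The sketch below is a simplified, CPU-only illustration of that general technique, not a description of how Finout calculates costs; the node price, node size, and pod names are hypothetical. Capacity that no pod requests is reported separately as unallocated, which is one direct signal of waste.

```python
from collections import defaultdict


def allocate_node_cost(node_hourly_cost: float, node_cpu_cores: float,
                       pod_cpu_requests: dict[tuple[str, str], float]) -> dict[str, float]:
    """Split a node's hourly cost across namespaces by share of CPU requested.

    pod_cpu_requests maps (namespace, pod_name) to requested cores.
    Capacity that no pod requests is returned under "(unallocated)".
    """
    cost_per_core = node_hourly_cost / node_cpu_cores
    by_namespace: dict[str, float] = defaultdict(float)
    for (namespace, _pod_name), cores in pod_cpu_requests.items():
        by_namespace[namespace] += cores * cost_per_core
    allocated = sum(by_namespace.values())
    by_namespace["(unallocated)"] = max(0.0, node_hourly_cost - allocated)
    return dict(by_namespace)


if __name__ == "__main__":
    # Hypothetical 4-core node costing $0.40 per hour.
    requests = {
        ("checkout", "api-1"): 1.0,
        ("checkout", "api-2"): 1.0,
        ("search", "indexer-1"): 0.5,
    }
    for namespace, cost in allocate_node_cost(0.40, 4, requests).items():
        print(f"{namespace}: ${cost:.3f}/hour")
```

Real allocation models usually blend CPU and memory and may weight by actual usage rather than requests; this version keeps only the proportional idea.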

Finout also groups all your cloud costs into a MegaBill, giving you a single overview of your infrastructure spending, including costs from AWS, GCP, Snowflake, and K8s.

Giving DevOps & FinOps teams the tools they need to tackle waste

By adding holistic Kubernetes support to our CostGuard solution, our team helped solve one of the biggest and most time-consuming challenges DevOps & FinOps teams face.

Finout automatically scans all K8s resources and identifies waste by deployment, namespace, cronjob, and pod label, helping you calculate the cost impact of changing request levels from day one. All of this works without paying more or installing an agent, across all three major cloud providers (AKS, GKE & EKS).
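The value of changing a request level comes down to simple arithmetic: the cores you stop reserving, multiplied by replicas, unit price, and hours. The sketch below shows that calculation for CPU only, assuming the freed capacity actually translates into fewer or smaller nodes. The per-core-hour price is a hypothetical input (real prices depend on your provider, instance type, and discounts), 730 hours is a common approximation of a month, and this is an illustration rather than Finout's calculation.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_rightsizing_savings(current_cores: float, recommended_cores: float,
                                replicas: int, price_per_core_hour: float) -> float:
    """Estimated monthly savings from lowering each replica's CPU request."""
    # Only reductions count as savings; a recommended increase returns 0.0.
    reclaimed_cores = max(0.0, current_cores - recommended_cores)
    return reclaimed_cores * replicas * price_per_core_hour * HOURS_PER_MONTH


if __name__ == "__main__":
    # Hypothetical: 6 replicas request 2 cores each, but 0.5 cores is enough.
    savings = monthly_rightsizing_savings(2.0, 0.5, replicas=6, price_per_core_hour=0.03)
    print(f"Estimated savings: ${savings:,.2f}/month")
```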

Final thoughts

As Finout grows in both scope and depth, it's important for us to tackle the industry's main pain points head-on.

Helping our customers quickly reduce their Kubernetes waste, cut costs, and improve sustainability was a major milestone for us.

I invite you to take Finout for a test drive and see how much you can save on your cloud costs from day one.